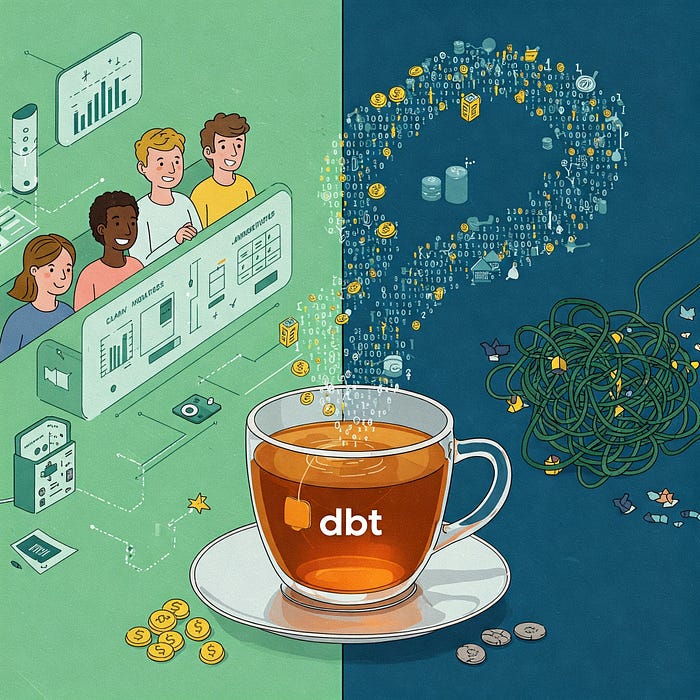

Data build tool — ‘dbt’ is not everyone’s cup of t(ea)

dbt (data build tool), created in 2016 and stewarded by dbt Labs (known as Fishtown Analytics until 2021), has quickly become the go-to solution for turning raw data into trusted, analysis-ready insights. But what came before dbt? Teams relied on fragmented SQL scripts, clunky ETL tools like ‘not-naming-names’ (you know who you are), or stored procedures buried in databases: methods that often led to spaghetti code, poor version control, and endless maintenance headaches. With dbt Cloud, teams can centralize their data logic, ensure quality through built-in testing and documentation, and ship data products faster by leveraging modular, reusable code. Big names like Nasdaq, HubSpot, and Virgin Media swear by it, and it’s easy to see why: dbt helps teams reduce costs, speed up pipelines, and make data-driven decisions with confidence. But here’s the million-dollar question: is dbt really for everyone? Sure, it’s powerful, but do its steep learning curve and niche appeal make it a risky bet for some teams? Let’s dive in and find out.

Him: I don’t understand why you chose “dbt” for the data warehousing project when you know it has serious limitations. The project will certainly sink just like the Titanic. A huge (titanic) mistake in my opinion.

Her: Okay! I think you have made up your mind. Can you help me understand how you got to this conclusion?

Him: It falls short where most tools shine: letting you work in plain “SQL” or “Python”. Who wants to learn a totally new language for doing the same transformations that can be done quite easily in “SQL” or “Python”?

Her: dbt’s strength lies in modularity, the same principle that lets any programming language, Python included, break logic into small, reusable functions and modules. With modularity you not only gain better control over the structure and organization of your code, but also benefit from code reusability. You get more value per line of code written. It’s pure genius in my opinion.
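To make the reuse argument concrete, here is a minimal Python sketch. In dbt, shared logic like this would typically live in a Jinja macro; a plain Python function stands in for that macro here, and the names `cents_to_dollars`, `amount_cents`, `raw_orders` and `raw_refunds` are hypothetical.

```python
import sqlite3


def cents_to_dollars(column: str, scale: int = 2) -> str:
    """Return a SQL expression that converts an integer cents column to dollars.

    Hypothetical stand-in for a dbt macro: the logic is written once and reused.
    """
    return f"round({column} / 100.0, {scale})"


# Two hypothetical models reuse the same expression instead of copy-pasting it.
orders_sql = f"select order_id, {cents_to_dollars('amount_cents')} as amount from raw_orders"
refunds_sql = f"select refund_id, {cents_to_dollars('refund_cents')} as refund from raw_refunds"

# Run both against throwaway in-memory tables to show the generated SQL is valid.
con = sqlite3.connect(":memory:")
con.execute("create table raw_orders (order_id integer, amount_cents integer)")
con.execute("create table raw_refunds (refund_id integer, refund_cents integer)")
con.execute("insert into raw_orders values (1, 1999)")
con.execute("insert into raw_refunds values (7, 250)")
orders = con.execute(orders_sql).fetchall()
refunds = con.execute(refunds_sql).fetchall()
print(orders, refunds)
```

Changing the rounding rule now means editing one function rather than every model that converts currency, which is the value-per-line argument in practice.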

Him: That’s not my point! I question the need for a new language and its steep learning curve. It feels like rock climbing on Yosemite’s Half Dome. Unlike SQL or Python, which are widespread, I don’t see much ROI in learning this new skill. It’s neither easy to acquire nor are there enough developers who are good at it, which makes it a niche skill overall. A solution that relies on niche skills becomes highly dependent on a few people and difficult to maintain as it grows. Can you imagine the number of developers it would take to maintain it, when they are so difficult to find and retain?

Her: Without understanding modularity and code reusability, it’s hard to justify the value of learning “Jinja SQL”. Although dbt code compiles down to plain SQL, which is declarative by nature, it’s how we get there that matters. Imagine a decade-old project with a bunch of databases, many more schemas and countless stored procedures with thousands of lines of code. You know the challenges of operating, maintaining and delivering value through such large data systems. dbt can be a lifesaver here. It smartly borrows concepts from the software engineering world, such as version control and CI/CD, modular code, scalability and cloud-native compatibility, and much more, which makes it ideal for implementing data transformation solutions at scale. The rock climbing is well justified here, and isn’t the view from the top of Half Dome quite spectacular?
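The “compiles down to plain SQL” point can be illustrated with a toy compiler. Real dbt renders models with the Jinja engine and resolves each `ref()` to a fully qualified relation in the current target; the regex-based sketch below only imitates that behavior, and the function `compile_model`, the schema names (`analytics`, `dbt_dev_jane`) and the model names are hypothetical.

```python
import re

# Hypothetical default schema; in dbt this would come from the active target.
TARGET_SCHEMA = "analytics"

# Matches calls like {{ ref('stg_orders') }} or {{ ref("stg_orders") }}.
REF_PATTERN = re.compile(r"\{\{\s*ref\(\s*['\"](\w+)['\"]\s*\)\s*\}\}")


def compile_model(source: str, schema: str = TARGET_SCHEMA) -> tuple[str, set[str]]:
    """Replace ref() calls with schema-qualified names and collect dependencies."""
    deps: set[str] = set()

    def resolve(match: re.Match) -> str:
        model_name = match.group(1)
        deps.add(model_name)  # every ref() is also an edge in the lineage graph
        return f"{schema}.{model_name}"

    return REF_PATTERN.sub(resolve, source), deps


# A hypothetical templated model that joins two upstream staging models.
model = """
select o.order_id, c.customer_name
from {{ ref('stg_orders') }} as o
join {{ ref('stg_customers') }} as c on o.customer_id = c.customer_id
"""

compiled, deps = compile_model(model)
print(compiled)
print(sorted(deps))
```

Compiling the same model against another schema, for example `compile_model(model, "dbt_dev_jane")`, points every reference at a developer's own sandbox, which is roughly the idea behind isolated development environments.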

Him: I see your point, but I worry about the availability of this niche talent in the market. Don’t you think we are multiplying the risk? It’s like building astronomical clocks: beautiful, but few people can build or fix them.

Her: You are missing the bigger picture here. Do you understand what makes a data project successful?

Him: Yes, of course! Good-quality data that is valuable to the business.

Her: Many factors contribute to a data project’s success and value. In general, you need high-quality data in a resilient architecture that is discoverable, available and securely accessible. Failure in any of these areas drastically reduces the value of the data being served, resulting in anything from partial to total failure. dbt, by design, addresses some very important data management and DataOps issues. Built on DataOps principles, it offers tighter control over data quality through dbt tests and source freshness checks, incremental models that process only new or changed records and snapshots that track changes over time, data lineage derived from the ref() dependencies between models, excellent documentation generated from metadata in YAML files, environment management with isolated development, change management with proper version control, and scalability through a cloud-native architecture. Put all this together and you have a perfect recipe for success. Do you see the big picture now?
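Two of those properties, lineage and tests, are easy to sketch. dbt derives lineage from `ref()` calls and runs generic tests such as `unique` and `not_null` as queries that select failing rows, so a test passes when zero rows come back. The sketch below imitates both ideas with SQLite and Python’s `graphlib`; the `MODELS` and `TESTS` structures, the sample data and the helper names are hypothetical, and real dbt compiles Jinja and runs against your warehouse rather than SQLite.

```python
import sqlite3
from graphlib import TopologicalSorter

# Hypothetical models: name -> (select statement, set of upstream models it refs).
MODELS = {
    "stg_customers": ("select 1 as customer_id, 'Ada' as name union all select 2, 'Linus'", set()),
    "stg_orders": ("select 10 as order_id, 1 as customer_id union all select 11, 2", set()),
    "customer_orders": (
        "select o.order_id as order_id, c.name as name "
        "from stg_orders o join stg_customers c on o.customer_id = c.customer_id",
        {"stg_orders", "stg_customers"},
    ),
}

# Hypothetical generic tests: (model, column, test name).
TESTS = [
    ("customer_orders", "order_id", "unique"),
    ("customer_orders", "order_id", "not_null"),
    ("customer_orders", "name", "not_null"),
]


def failing_rows_sql(model: str, column: str, test: str) -> str:
    """Return a query that selects failing rows; zero rows means the test passes."""
    if test == "not_null":
        return f"select {column} from {model} where {column} is null"
    if test == "unique":
        return (
            f"select {column}, count(*) from {model} where {column} is not null "
            f"group by {column} having count(*) > 1"
        )
    raise ValueError(f"unknown test: {test}")


def build(con: sqlite3.Connection) -> list[str]:
    """Materialize every model as a table, upstream models first (the lineage DAG)."""
    graph = {name: deps for name, (_, deps) in MODELS.items()}
    order = list(TopologicalSorter(graph).static_order())
    for name in order:
        sql, _ = MODELS[name]
        con.execute(f"create table {name} as {sql}")
    return order


def run_tests(con: sqlite3.Connection) -> dict[tuple[str, str, str], int]:
    """Map each test to its number of failing rows."""
    return {t: len(con.execute(failing_rows_sql(*t)).fetchall()) for t in TESTS}


con = sqlite3.connect(":memory:")
order = build(con)
results = run_tests(con)
print("build order:", order)
for (model, column, test), failures in results.items():
    print(f"{test}({model}.{column}):", "PASS" if failures == 0 else f"FAIL ({failures} rows)")
```

Because every model declares its upstream dependencies, the build order and the lineage graph come for free; inserting a duplicate `order_id` into `customer_orders` and re-running the tests would flip the `unique` check to a failure.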

Him: Yes, a perfect recipe for delays and higher time to market!

Her: The initial investment in setting this up is higher because of the multi-faceted approach it takes, but the end product sits higher up the value chain. You’ll notice cycle times drop as the solution matures, and in the medium to long run what you have built is sustainable, scalable and, more importantly, valuable.

Him: Why not use Databricks instead of dbt to get the job done? I’ve got my two biceps ready to flex — left arm SQL and right arm Python.

Her: Databricks? Absolutely! It’s a great platform for data engineering and machine learning combined. Databricks excels at handling large-scale data processing and machine learning workflows, but it can be overkill for teams focused solely on SQL-based transformations. dbt, on the other hand, shines in its simplicity, modularity, and ability to integrate seamlessly with existing cloud data warehouses. Besides, it’s inappropriate to send a heavyweight contender to a lightweight championship match — though it might be fun to watch!

Him: Are you telling me that dbt has ‘transcended from the heavens to save humankind from imminent apocalyptic turmoil’?

Her: No, that’s not what I’m saying. Of course, there’s no one-size-fits-all; it all depends on the use case. dbt is a powerful tool, but it is best suited to a warehouse setup with relational modeling. There is a world out there with non-relational data from NoSQL sources, systems that require integrations and interoperability, ad-hoc or small-scale projects, real-time data processing solutions and, finally, projects on a strict budget, since dbt Cloud costs can add up quickly. Like I said, it is powerful but not for everyone. Where it applies, it shines and is worth considering. I’d even say the juice is definitely worth the squeeze. :)

I hope that was engaging enough to get my thoughts across. In summary, while the strengths of ‘dbt’ as a preferred solution are undeniable, it doesn’t fit every situation. After all, not all soccer players turn out to be legendary tennis players, unless you’re Roger Federer, of course!

Have you been part of such debates? If yes, share your thoughts and experiences in the comments below!

Footnote: This article reflects my personal opinion and is not associated with any organization.